Tools

Test Coverage

Ensuring our code works as intended

Overview

We have three testing methods within the ink ecosystem: unit tests, end-to-end tests, and visual regression tests.

Each uses a different framework, so it's important to determine which kind of testing is appropriate for your use case.

A note about ink environments

The ink ecosystem contains several environments:

Our end-to-end and visual regression tests both interact with the Exposé environment

Unit Tests

Testing Library is the preferred choice but many tests were written in Jest and Enzyme. Regardless of the library, unit tests are predominantly used to test non-visual component behavior and React hooks.

When to write unit tests

- To test an individual isolated "unit" of code. Unit tests can be run independently of their test suite, without a need for other "units" to be complete

- To mock interactions with external dependencies (such as libraries, databases, etc)

- To test a method's input and output

- To verify whether a method impacts a component's state

Best practices

Tests for each component should live in a `<component name>.spec.tsx` file in the same folder as the component.

❌ Don't

- Don't reference CSS IDs or class names. CSS identifiers of Ink components are subject to change without warning. Most of our components are now styled, which means they use dynamic, unique identifiers. Referring to these identifiers in other code, such as unit tests, makes that code more fragile.

- Don't use snapshots to test HTML output

- Don't add a test for the sake of testing. Each test must have a purpose

✅ Do

- Each test within a suite should test a single function or component behavior

- Use selectors that resemble how users interact with the code, such as labels and content text

- Use `describe` to group tests with similar aspects
  - For example `structure`, `behavior`, `callbacks`, etc
  - One or two words are enough to describe the concept being tested

- Use `it` as the test executable but don't use the `test` keyword
  - Generally a short sentence to describe the expected result of an assertion

- Use the `arrange`, `act` and `assert` pattern to write your unit tests
  - Group statements accordingly with an empty line between them

- Prefer `mount()` over `shallow()`
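The `describe`/`it` grouping and the arrange, act, assert layout can be sketched roughly as follows. This is a minimal illustration, not one of our real specs: `toggle` and `ToggleState` are hypothetical, and `describe`, `it` and `expectEqual` are small stand-ins defined inline so the sketch runs without Jest. In a real spec file the test runner provides `describe`, `it` and `expect`.

```typescript
// Minimal stand-ins for Jest's global `describe` and `it`, so this sketch runs
// without a test runner. In a real spec file, Jest provides both.
const passed: string[] = [];
const scope: string[] = [];

function describe(title: string, body: () => void): void {
  scope.push(title);
  body();
  scope.pop();
}

function it(title: string, body: () => void): void {
  body(); // a thrown error fails the test
  passed.push(scope.concat(title).join(" > "));
}

function expectEqual<T>(actual: T, expected: T): void {
  if (actual !== expected) {
    throw new Error(`expected ${String(expected)}, received ${String(actual)}`);
  }
}

// Hypothetical unit under test: a pure state updater for a toggle.
interface ToggleState {
  isOpen: boolean;
}

function toggle(state: ToggleState): ToggleState {
  return { isOpen: !state.isOpen };
}

describe("toggle", () => {
  // One or two words name the aspect being tested.
  describe("behavior", () => {
    // A short sentence describes the expected result.
    it("opens a closed toggle", () => {
      // Arrange
      const initial: ToggleState = { isOpen: false };

      // Act
      const next = toggle(initial);

      // Assert
      expectEqual(next.isOpen, true);
    });

    it("does not mutate the previous state", () => {
      const initial: ToggleState = { isOpen: false };

      toggle(initial);

      expectEqual(initial.isOpen, false);
    });
  });
});
```

Each `it` covers a single behavior, and the empty lines separate the arrange, act and assert steps.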

Example

Button.spec.tsx

How to run unit tests locally

To run the entire test suite:

To see the test coverage as well as the test results:

To run a specific component/test suite, pass a pattern to the command such as the name of a component or the path to the file you're trying to run:

End-to-end testing

We use Cypress

When to write end-to-end tests

- To test the execution of a component from start to finish

- To mimic real-world scenarios and user interactions

- To test communication between components and the database, APIs, network, and libraries

- To test accessibility as the engine "sees" the same things that a screen reader would (for the most part)

Best practices

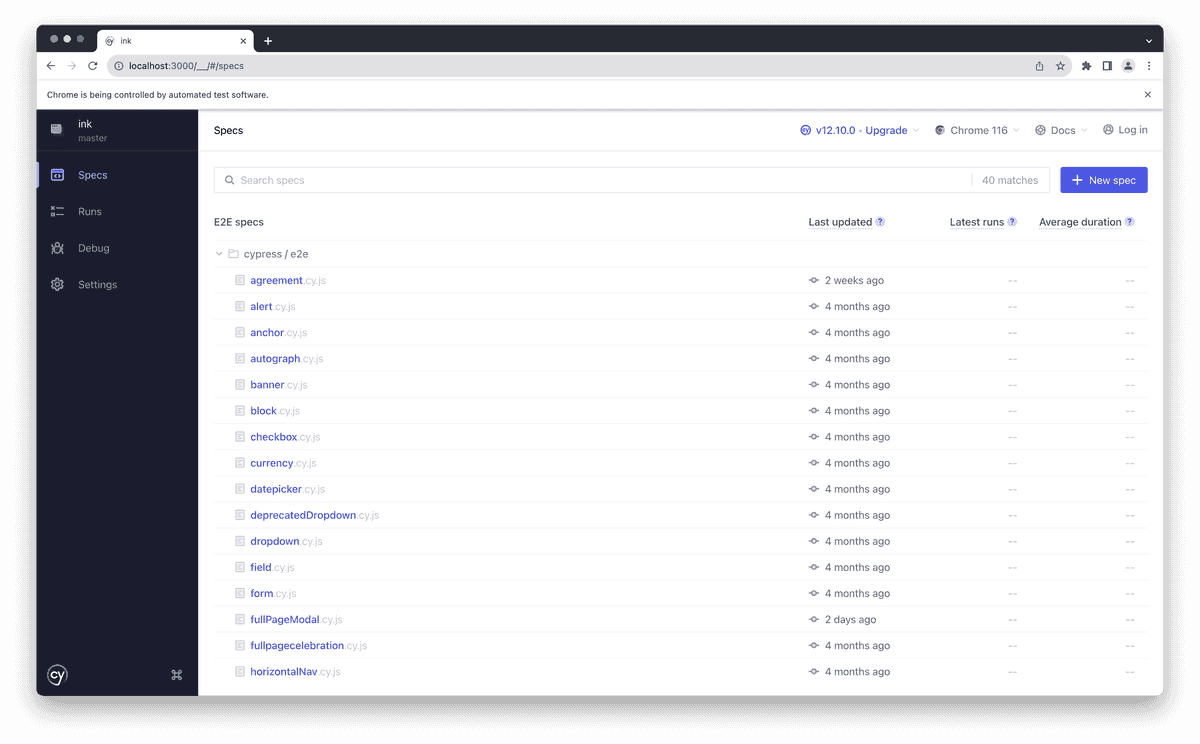

All tests are named `<component name>.cy.js` and live within the cypress folder. We use them to replicate expected user behavior, and all tests are run against the Exposé environment.

❌ Don't

- Don't reference CSS IDs or class names. CSS identifiers of Ink components are subject to change without warning. Most of our components are now styled, which means they use dynamic, unique identifiers. Referring to these identifiers in other code, such as tests, makes that code more fragile.

- Don't test components that are purely visual

- Don't use Cypress for code that doesn't render a UI, such as custom hooks

✅ Do

- Use selectors that resemble how users interact with the code, such as labels and content text

- Make assertions and use `.should()` to do it

- If you can't find an element using the component name, use the attribute `data-testid`
  - Example: `cy.get('table[data-testid="your-data-testid"]')`

- Use `describe` to group tests with similar aspects
  - For example `structure`, `behavior`, `callbacks`, etc
  - One or two words are enough to describe the concept being tested

- Use `it` as the test executable but don't use the `test` keyword
  - Generally a short sentence to describe the expected result of an assertion

Example

cypress/e2e/newTable.cy.js

How to run Cypress locally

Cypress tests run against Exposé, so we must run Exposé first:

Assuming you are running Exposé on port 3000, open a second terminal window to run Cypress:

If you want to run Cypress against a different port, you can change it using:

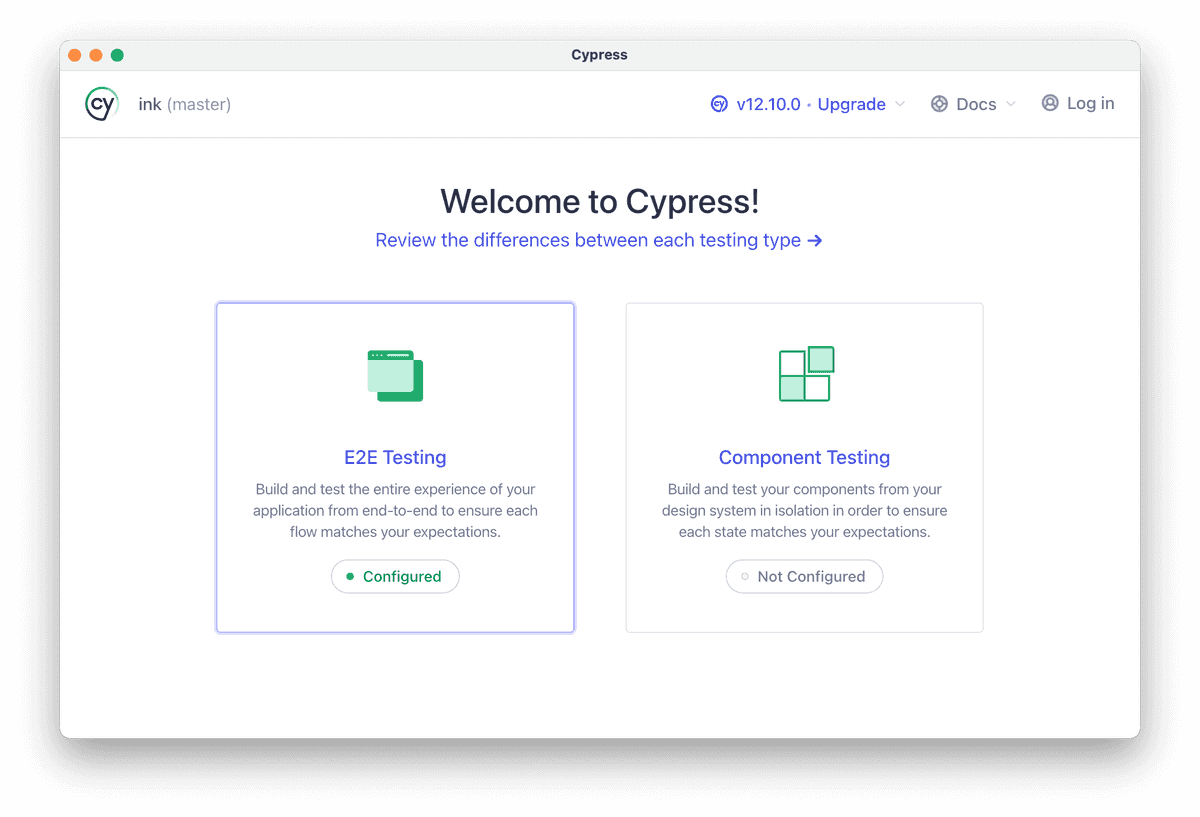

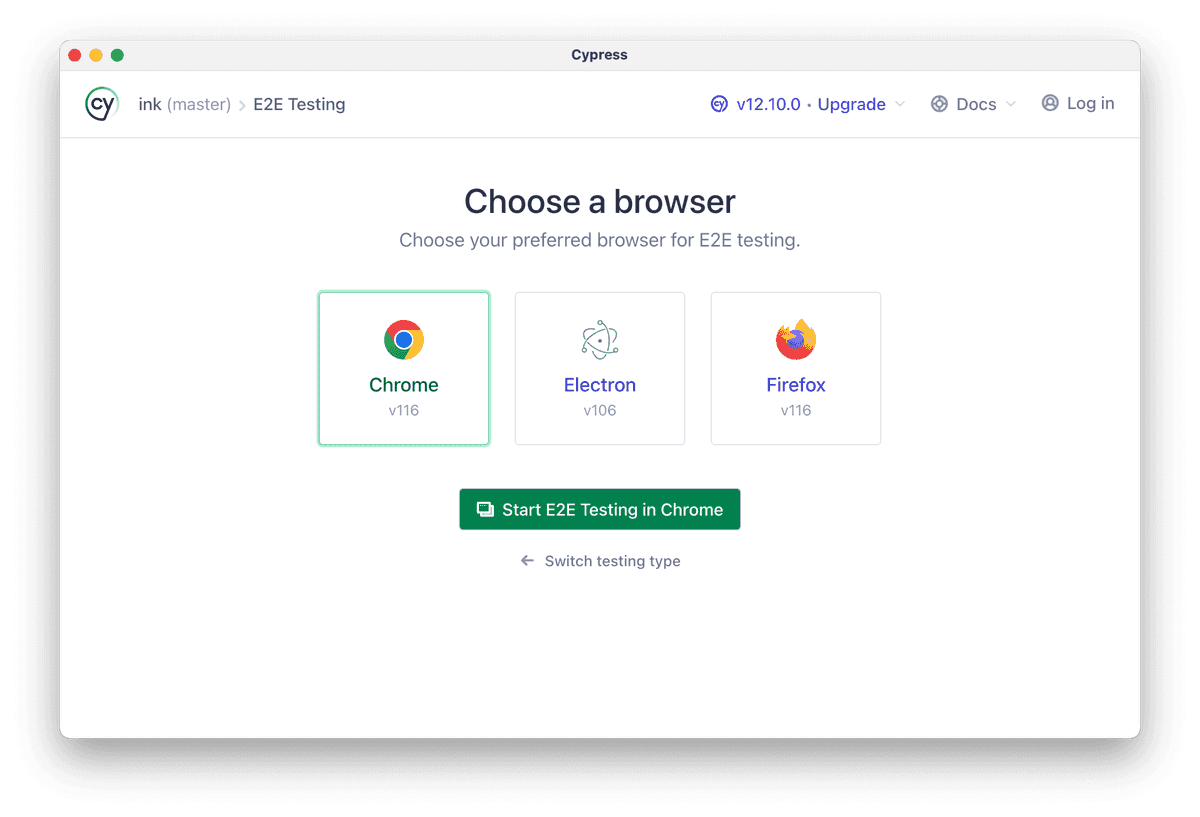

The Cypress screen will present you with some choices:

Cypress tests are live. If an error occurs, Cypress will show where the problem is. It can also automatically re-run tests if you leave it open and modify a component's code. However, this doesn't work with samples, as samples need to be scraped, so Exposé must be restarted.

Note: sometimes tests run in CircleCI yield different results, as CircleCI runs tests in "production" mode

Visual Regression

We use BackstopJS to collect sample snapshots from our internal testing environment, Exposé. The goal is to cover as many visual variants of each component as possible through atomic sampling, which lets us check for cascading visual effects.

When to write visual regression tests

- When a component has visually changed in any way

- To test visual interactions such as opening dropdowns, clicking buttons, selecting checkboxes, etc

We are predominantly looking for unexpected changes to the visuals

Best practices

There are only two ways to generate a snapshot:

- All Exposé sample snippets are snapshotted on load (default state)

- Additional scenario tests added to the corresponding `<component name>.backstop.json` within the backstop-references folder

Naming test scenarios

Names of each scenario must be unique!

Note: Exposé sample snippets and backstop scenarios (added via .backstop.json) don't conflict as they are namespaced to their respective environment
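Because uniqueness is not checked for us, a small helper can flag repeated names before a run. The sketch below is only an illustration: `findDuplicateNames` is a hypothetical helper, not part of our tooling, and the scenario names are made up.

```typescript
// Returns each scenario name that appears more than once, listed once,
// in the order the repeat was first detected.
function findDuplicateNames(names: string[]): string[] {
  // A prototype-free object, so names such as "constructor" are counted safely.
  const seen: Record<string, number> = Object.create(null);
  const duplicates: string[] = [];
  for (const scenarioName of names) {
    seen[scenarioName] = (seen[scenarioName] || 0) + 1;
    // Record a name the second time it appears, so it is reported only once.
    if (seen[scenarioName] === 2) {
      duplicates.push(scenarioName);
    }
  }
  return duplicates;
}

// Hypothetical scenario names for illustration only.
const duplicateNames = findDuplicateNames([
  "NewTable with twiddle rows expanded",
  "NewTable with twiddle rows collapsed",
  "NewTable with twiddle rows expanded",
]);
```

An empty result means every name in the list is unique.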

Our custom schema

We use a custom schema to make matching our Exposé sample snippets easy. A script is run in the background to convert it to Backstop's format.

- `sampleName`: references the exact sample name in the `samples.js` file
- `state`: indicates the state of the sample
- `selectors`: required only if it's not the sample name, such as 'viewport'
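To make the conversion step concrete, here is a rough sketch of how an entry in the custom schema could map onto a Backstop scenario. It is not our actual conversion script. The `CustomScenario` type, the `data-sample` attribute, the label format, and the example URL are all assumptions made for illustration; `label`, `url` and `selectors` are standard BackstopJS scenario fields.

```typescript
// Hypothetical shape of one entry in our custom schema (field names from the list above).
interface CustomScenario {
  sampleName: string; // exact sample name from samples.js
  state: string; // state of the sample
  selectors?: string[]; // assumed to be an array; only set when the target is not the sample itself
}

// Subset of the scenario fields that BackstopJS reads.
interface BackstopScenario {
  label: string;
  url: string;
  selectors: string[];
}

// Assumption: a sample can be targeted through a data attribute holding its name.
function sampleSelector(sampleName: string): string {
  return `[data-sample="${sampleName}"]`;
}

function toBackstopScenario(scenario: CustomScenario, componentUrl: string): BackstopScenario {
  return {
    // Assumption: the label combines sample name and state, which keeps names unique per state.
    label: `${scenario.sampleName} ${scenario.state}`,
    url: componentUrl,
    // Fall back to the sample's own selector when none is given.
    selectors: scenario.selectors ?? [sampleSelector(scenario.sampleName)],
  };
}

// Hypothetical state name and URL, for illustration only.
const convertedScenario = toBackstopScenario(
  { sampleName: "NewTable with twiddle rows", state: "expanded" },
  "http://localhost:3000/NewTable",
);
```

Passing `selectors: ["viewport"]` would skip the fallback and capture the viewport instead.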

Example

Let's say our NewTable component has 2 samples, "Base NewTable" and "NewTable with twiddle rows"

NewTable/samples.js

However, this only snapshots the initial state.

If we want to snapshot additional visual checks for the scenario "NewTable with twiddle rows", we need to add them as follows:

NewTable.backstop.json

Unfortunately this task isn't automated so if you update a sample name, you must also update the corresponding backstop scenario names.

Breakpoints

Similar to how we can set different environments[] in each sample snippet, we use the key breakpoints[] to include snapshots at various screen sizes.

By default, we snapshot at the desktop size. Additional options include:

Technical note: Backstop uses viewports, but to keep sample snippets and additional scenarios aligned, breakpoints is used in both places

Example

We know that Autograph's "Sign here" flag hides itself on mobile only, so we will add a snapshot at each available breakpoint to ensure that's the case:

Autograph/samples.js

Skipping sample snapshots

There are only two reasons to use a skip:

- The test is flaky
  - with `skipFlakyBackstop: true`
  - If this flag is used, add a comment with context and links if applicable. File a Jira ticket so we can circle back to fix the regression test.

- The initial testing state is irrelevant
  - with `skipInitialBackstop: true`

Example

We usually need a trigger to open a component like Modal. We don't need to screenshot the trigger itself (in this case a Button), so we can skip the initial state:

Modal/samples.js

Modal.backstop.json

Scenario options

Here are a few options we commonly use:

See Backstop's documentation for all available options

Consistent capture

We want our snapshots to be consistent, which means the test has to hold the given state (e.g. hover, active, focus) at capture time. Sometimes this requires modifications to ignore focus rings or animation delays so that tests don't become flaky.

- Append an outside selector at the end of `clickSelectors[]` to prevent focus rings. For example, all samples display a `useWindowWidth()` value in Exposé. This is currently a `small` element, which doubles as a way to click outside the sample.

- A `scrollToSelector` selector might be needed when used with `viewport` snapshots to consistently snapshot the sample at the same position on a given page

- Add a `postInteractionWait` (can be a selector or a time in milliseconds, none by default) to delay capture if a component requires multiple clicks or an animation to complete

- Add a `delay` (none by default) as a last resort
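The outside-click technique can be sketched as a small helper. This is an illustration under assumptions rather than code from our setup: `withOutsideClick` and the `data-testid` selector are hypothetical, and using `small` as the outside selector assumes the `useWindowWidth()` element is the only matching element worth clicking. The option names themselves (`clickSelectors`, `scrollToSelector`, `postInteractionWait`, `delay`) are BackstopJS scenario options.

```typescript
// Subset of the BackstopJS scenario options discussed above.
interface CaptureOptions {
  clickSelectors: string[];
  scrollToSelector?: string;
  postInteractionWait?: number | string; // milliseconds or a selector
  delay?: number; // milliseconds
}

// Appends a selector outside the sample as the final click,
// so focus moves off the component before the capture.
function withOutsideClick(options: CaptureOptions, outsideSelector: string): CaptureOptions {
  return {
    ...options,
    clickSelectors: options.clickSelectors.concat(outsideSelector),
  };
}

const stableCapture = withOutsideClick(
  {
    clickSelectors: ['[data-testid="twiddle-row"]'], // hypothetical selector
    postInteractionWait: 300, // hypothetical wait for an expand animation
  },
  "small", // assumption: the useWindowWidth() element in Exposé
);
```

The helper returns a new object, so the original options can be reused for a scenario that should keep its focus ring.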

Custom Exposé page

We have a few custom Exposé pages to help capture design issues that span multiple components, such as the Disabled page, which guards against color inconsistencies. To add Backstop scenarios, we must first add the Exposé page manually and then add a corresponding backstop file.

Example

- Create `DisabledExpose.tsx` and add to `src/Expose/extra-exposes`

- Add `Disabled: []` to the `samples` object in `src/Expose/helpers/getSamples.ts`

- Add the route `<Route exact path="/Disabled" component={DisabledExpose} />` to `src/Expose/Expose.tsx`

- Create `Disabled.backstop.json` and add to `src/Expose/backstop-references` before adding scenarios as normal

How to run Backstop locally

⚠️ Although the idea of Backstop is to test against a reference, we can run a single copy for sanity checks such as naming issues. The local snapshot doesn't produce the same capture as the one run in CI.

(No comparison) Snapshot for debugging purposes

Must be running Expose first

(Optional, in a separate terminal window) To skip rebuilding each time there are sample changes:

In a separate terminal window, run backstop (with desired flags)

(Comparison) Test against local master as a reference

The reference can actually be any branch but using master as the example:

After all reference snapshots are created, you can stop Exposé and switch to the branch you need to compare:

Running selective tests with --filter

The filter only needs a partial match against the filename, which contains:

- Component name

- Kebab case of the sample's name (or `sampleName`)

- `viewports` values

- Exposé samples (query `-expose`)

- Backstop scenarios (query `-backstop`)
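To show why a partial match is enough, the sketch below builds a filename from the parts listed above and checks filters against it. Everything here is an assumption for illustration: the helper names, the order and separator of the filename parts, the exact kebab-case rules, and the modelling of the filter as a plain substring check. The real filenames may differ.

```typescript
// Assumption: kebab case here means lowercase words joined by hyphens.
function toKebabCase(text: string): string {
  return text
    .trim()
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, "");
}

// Hypothetical filename layout: component, sample name, viewport, then the source suffix.
function snapshotFilename(
  component: string,
  sampleName: string,
  viewport: string,
  source: "expose" | "backstop",
): string {
  return [component, toKebabCase(sampleName), viewport, source].join("-");
}

// The filter only needs a partial match, modelled here as a substring check.
function matchesFilter(filename: string, filter: string): boolean {
  return filename.indexOf(filter) !== -1;
}

const exampleFile = snapshotFilename("NewTable", "NewTable with twiddle rows", "desktop", "expose");
const matchesSample = matchesFilter(exampleFile, "twiddle-rows");
const matchesBackstopOnly = matchesFilter(exampleFile, "-backstop");
```

Under these assumptions, a filter on `twiddle-rows` selects this snapshot, while `-backstop` leaves it out because it comes from an Exposé sample.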

Examples

(using local)

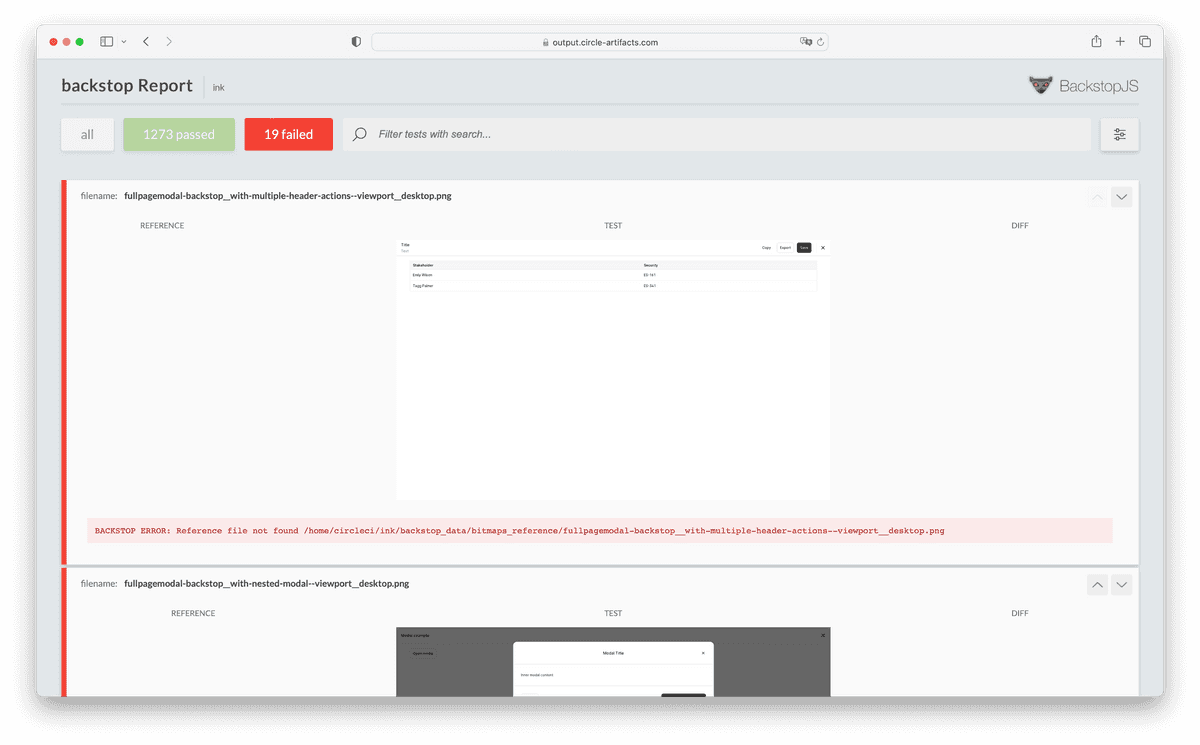

Failing tests

Valid failing tests:

- New samples because there was no prior reference

- Modifications to a sample's name will generate a new filename (this is used to make snapshots unique)

Always check the report (local or on CI) and review each failing test. If you don't know why something is failing, ask for help.

Example of failing a Backstop suite:

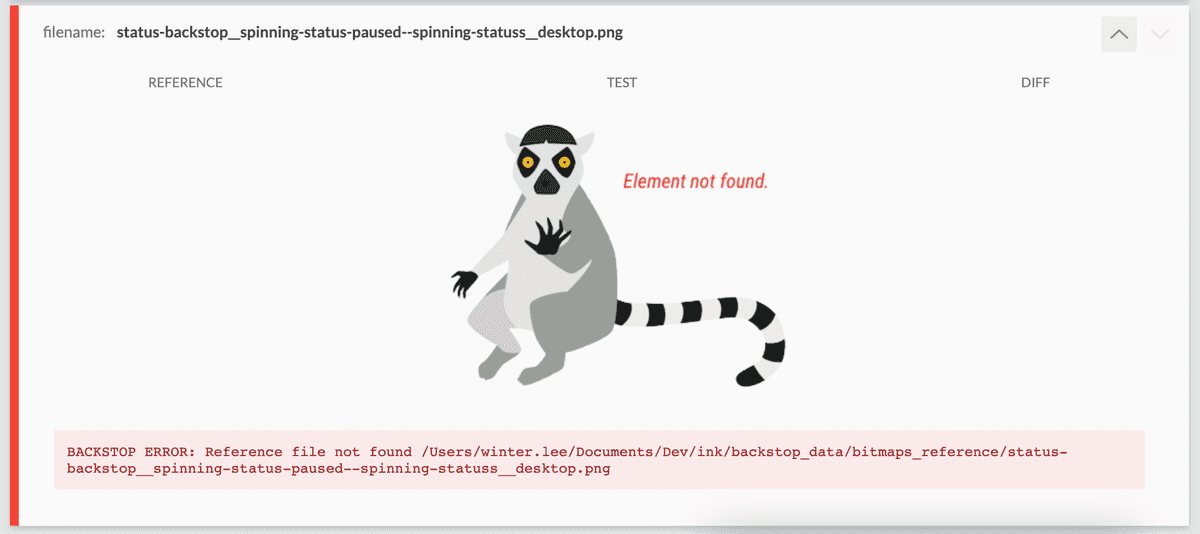

A misspelled selector will bring up a badger:

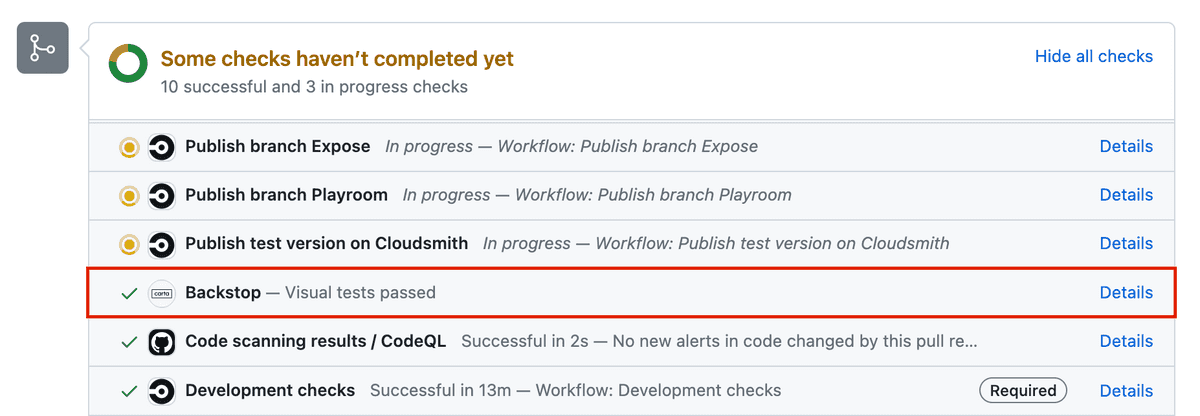

Approving failing Backstop in the CI

If tests are failing due to intentional changes, you must approve the whole suite (to be used as the next reference) before merging. Run the following in a local terminal on your branch:

⚠️ Important! Never merge a failing test without checking

This generates a commit automatically and you will have to push it up to your branch for Backstop to rerun. It should pass the check. If it doesn't, there's probably a flaky test.

Courtesy queue

⚠️ It's best to check Slack to see if there's currently a merge going on at #ink-backstop

Backstop flakes used to be more common, but it is still worth avoiding a re-approval when someone merges a second before you (this only applies if their branch updates the Backstop reference).

Sometimes we have multiple branches that all need a Backstop approval. To avoid merge traffic, send a message with claim_backstop and a bot will declare your intentions. Once the PR is merged, use unclaim_backstop to let others know they can proceed with approving their branch's Backstop.
